Augmented Reality

Design and development of an augmented reality vehicle software infrastructure (IV-AR)

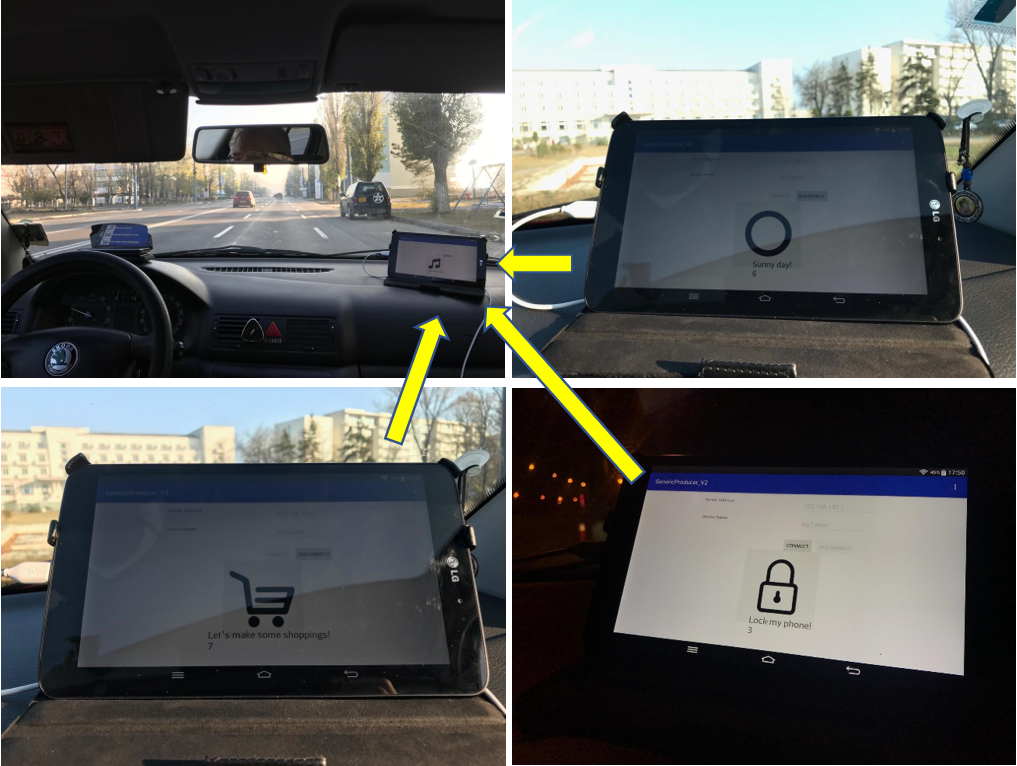

Digital Data Transfer Techniques (I)

Design and implementation of digital data transfer techniques between users' smart personal devices and In-Vehicle Information Systems (IVIS)

Digital Data Transfer Techniques (II)

Design and implementation of digital data transfer techniques between the IVIS and a user who is leaving the vehicle